Sep 03, 2025 · 7 min read

Comparison pages that convert: a fair structure that works

Comparison pages that convert need fair structure, clear proof, and CTAs matched to decision-stage intent. Use this layout to build trust and increase clicks.

People land on a comparison page when they’re close to choosing. They don’t want a long story. They want a clear answer to: “Which option fits me, and what do I do next?”

A page that earns trust does three things well: it stays fair, it focuses on what matters, and it helps the reader decide.

That’s what “comparison pages that convert” means in practice: the reader takes a sensible action, like checking pricing, starting a trial, booking a demo, or buying.

Most comparison pages fail for the same reasons. Some declare a winner in the first paragraph, so readers stop believing the rest. Others hide the details people truly compare, like limits, pricing rules, support, setup time, integrations, and cancellation terms. And many forget the final piece: after the tradeoffs are clear, there’s no clear next step.

Common failure patterns look like this:

Make a simple promise near the top and keep it: this comparison will be fair, it will focus on what matters, and it will help you decide. A good comparison can still recommend your option, but it earns that recommendation by being useful enough that readers choose willingly.

Decision-stage searches aren’t “what is X?” They’re “X vs Y,” “best for Z,” or “X alternative.” The reader already knows the category. They want help choosing quickly, and they’ll bounce if they have to dig through a long intro.

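Most comparison pages fit one of three formats: a direct “X vs Y” comparison, a shortlist of options, or a category grid that compares across segments.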

Pick one type and commit. A page that tries to be all three usually feels unfocused and less trustworthy.

Then choose one primary action and one backup action. The primary action should match the intent. For an “X vs Y” query, that might be “See pricing” or “Start a trial,” with a backup like “Download the comparison” or “Get a quick recommendation.” More than two actions create clutter.

Decide what you can truthfully evaluate. If you can’t test performance, don’t imply you did. If pricing changes often, say so and note when you checked.

Example: for “best content API for Next.js,” you can compare documentation quality, SDK availability, and how content gets published. You can also be explicit about what you verified (for example, IndexNow support or translation coverage) instead of guessing.

Fairness isn’t optional on comparison pages. If readers suspect you’re hiding incentives or shaping the result with language tricks, they leave.

Say upfront whether you’re independent, sponsored, or using affiliate links. Put it near the top in plain words. “We may earn a commission if you buy” is clearer than a vague legal paragraph.

Also explain how you judged the options. Readers don’t need a fancy scoring system, but they do need a method they can follow. For example: you tested key tasks, checked pricing on the same date, and compared support and refund terms as written.

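A simple disclosure block usually covers what readers care about: your relationship to the options (independent, sponsored, affiliate, or comparing your own product), how you evaluated them, and when you last checked pricing and key policies.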

Fairness also means being clear about fit. Tell readers who the page is for and who it isn’t for. One sentence can prevent refunds and frustration.

Keep labels neutral. “Best overall” and “Top pick” are fine if you define criteria. Avoid loaded language like “crushing” or “no-brainer.” Prefer categories like “Lowest starting price,” “Most automation,” or “Best for multilingual sites.”

If you’re comparing content platforms and you publish via a tool like GENERATED, disclose that relationship as well. Readers can handle bias when you’re honest about it.

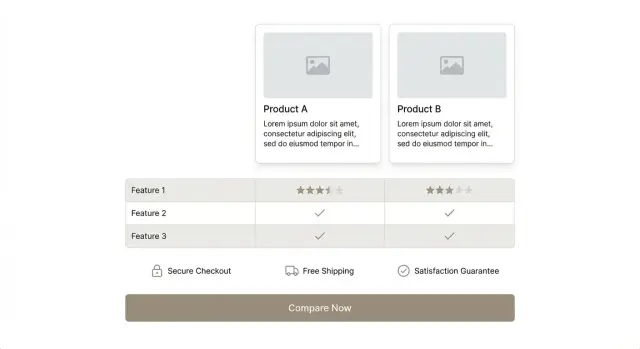

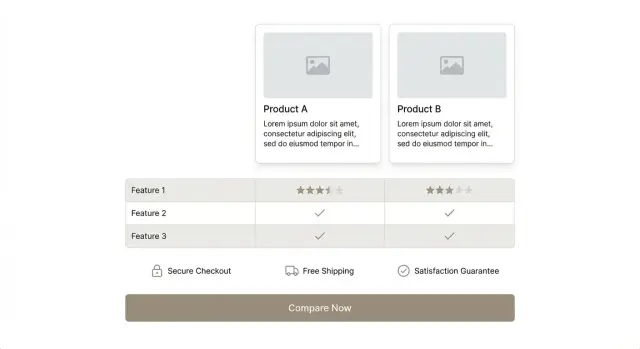

The first screen should answer one question: which option fits me?

Open with 2 to 4 lines that split the audience clearly. For example:

If you want the simplest setup and predictable monthly cost, Option A is usually the better pick. If you need deeper controls and can handle a longer setup, Option B is the better fit.

Then add a small, balanced snapshot with the facts readers look for first: price range, best for, and the biggest limitation. Don’t hide drawbacks. Calling out a real limit yourself reads as honest.

After that, add “top reasons” for each option, kept even. Aim for three reasons each, and make at least one a tradeoff (not a brag). Write it like a friend explaining a choice.

Only then place an early CTA. It should match the takeaway you just gave, like “Check current pricing for Option A” or “Confirm Option B supports your must-have feature.”

The table is where most readers decide whether your page is helpful or biased. It should feel like a tool, not a pitch.

Choose criteria that match real buying decisions, not what a marketing team wants to highlight. In many cases, 6 to 10 criteria is plenty: price model, key limits, setup time, support, security basics, what’s included by default, and cancellation terms.

Strong criteria are easy to verify:

Keep cells measurable, then add a short note for context. One sentence is enough. Notes prevent misunderstandings, such as whether a limit resets monthly or whether an “included” feature requires a higher tier.

When the honest answer is “depends,” don’t hide it. Say what it depends on and show the rule.

| Criteria | Option A | Option B |
|---|---|---|
| Price model | Per user | Flat fee + usage |
| API access | Yes (rate limit: 60/min) | Yes (rate limit varies by plan) |
| Support | Email (24-48h) | Chat (business hours) |

A simple pattern for “depends” cells: state the default, then the trigger. Example: “Included on Pro. On Basic, available as an add-on.”
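If your table is generated from structured data, the default-then-trigger pattern can be kept consistent with a small helper. The following is only a sketch: the `Cell` class, its field names, and the `render` function are hypothetical and not part of any particular tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Cell:
    """One table cell: a default value plus an optional condition (hypothetical model)."""
    default: str                   # what applies in the default case
    trigger: Optional[str] = None  # when the answer changes, if it does


def render(cell: Cell) -> str:
    """State the default first, then the trigger, as short sentences."""
    if cell.trigger is None:
        return cell.default
    return f"{cell.default}. {cell.trigger}."


# Example cell for a feature that depends on plan tier.
feature = Cell(default="Included on Pro", trigger="On Basic, available as an add-on")
print(render(feature))
```

Keeping the default and the trigger as separate fields makes it harder to publish a bare "depends" without saying what it depends on.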

Trust isn’t built by generic badges. It’s built by proof readers can check.

Use evidence a reader can verify. A screenshot of settings or a pricing page is often more convincing than a claim. Add a timestamp in the caption (even just month and year) so it doesn’t feel recycled.

Also include the constraints that are often hidden. If you leave out contract terms, add-ons, or tier limits, readers will assume you’re protecting one option.

A simple way to show trust without clutter:

A “Last checked” line is small but effective. Tell readers what you checked, not only when. For example: “Last checked: pricing page, free plan limits, cancellation terms, and support hours.”

Be careful with scoring. If you can’t explain why something got a 7/10, skip the score and write one clear sentence instead. If you do score, keep it consistent and show the criteria beside the score.

If you compare content tools like GENERATED, note the plan level you tested, whether features are included or add-ons, and the date you verified them.

Readers want a decision, not a lesson. Decision rules make the choice feel safe and keep your page fair because you’re not forcing one “best” pick for everyone.

Pick 3 to 5 common situations and recommend the best fit for each. Tie reasons to outcomes (time, risk, cost), not vague claims.

If none of the options is a clean fit, say so. Offer a credible next move: shortlist two and run a 7-day trial using the same checklist, or consider a specialized tool if the main need is narrow.

A strong comparison page is mostly process. When you follow the same steps each time, your pages feel fair, consistent, and easier to update.

Before publishing, do a quick bias and completeness check: “Would a customer of any option feel this is fair?”

Quick checks:

If you publish comparisons at scale, a tool like GENERATED can help keep the structure consistent, generate decision-stage CTAs, and track which sections drive clicks over time.

Comparison pages fail when they feel like a trick. Readers arrive ready to decide, but they still want proof, balance, and a clear next step.

A common issue is pushing action too early. If the first screen is packed with buttons, popups, and “Buy now” language, it signals bias. Earn the click first with a quick summary, the key differences, and who each option is for. Then place one clear CTA after the table and main tradeoffs.

Another trust killer is cherry-picked criteria. If you only compare categories where one option wins, readers notice what’s missing: price limits, contract terms, setup time, support hours. Include the uncomfortable criteria. A fair page can still convert because it helps the right people self-qualify.

Vague claims also backfire. Words like “best,” “fast,” and “easy” sound like ads unless you add specifics: “setup takes about 15 minutes,” “support replies in under 2 hours,” or “includes 10 seats.” If you can’t measure something, explain how you judged it (public documentation, pricing pages, or hands-on testing).

Outdated details quietly hurt conversions. Pricing and features change, so add a “Last checked” date near the table and keep it current. If you publish programmatically (for example, via an API workflow like GENERATED on generated.app), build in reminders or refreshes so the page stays accurate.

Finally, hiding weaknesses is worse than admitting them. If an option lacks a feature, costs more at scale, or needs technical help, say it plainly.

Read your page like a skeptical buyer. The goal is simple: make it easy to choose, and hard to feel misled.

If you publish through a tool like GENERATED, confirm tracking is in place so you can learn what people click and where they hesitate.

A small marketing team is choosing between two project management tools. They have 8 people, a few freelancers, and they need something that won’t turn into a part-time admin job.

They choose four criteria that match how they work day to day: pricing, permissions, integrations, and reporting. They skip the 20 “nice to have” rows that bury the real decision.

Pricing: Tool A is cheaper for small teams, but Tool B includes more features in the base plan. If you know you’ll need advanced reporting soon, Tool B may cost less over a year.

Permissions: Tool B is stronger if you work with freelancers or clients because you can limit what each person sees. Tool A is fine if everyone is internal and permissions aren’t a concern.

Integrations: Tool A wins if your team lives in one chat tool and needs quick, simple automation. Tool B wins if you need more enterprise connections (like SSO or deeper CRM sync).

Reporting: Tool B is better for leaders who want dashboards and workload views. Tool A is enough if you only need basic status and due dates.

Place CTAs right after the summary and again after the table, but adjust the wording to the reader’s goal:

If the reader is still unsure, add a short tie-breaker: “Choose Tool A if you need simple setup and lower cost today. Choose Tool B if permissions and reporting will matter in 3 to 6 months. Still split? Pick the one your team will actually open every day, based on a 10-minute test with a real project.”

Rebuild one comparison page first. Choose a page that already gets some traffic, has clear “vs” intent, and sits close to purchase. If that page improves, you get proof (and momentum) quickly.

After you publish, measure behavior, not only pageviews. A page can rank and still fail if readers don’t trust it or can’t decide.

Track a small set of signals that map to decisions:

Put the page on a refresh schedule. Comparisons go stale quickly. Add a “last checked” date and honor it. If you can’t confirm a detail, say so and explain how you handled it.

A practical rhythm is monthly for high-traffic pages and quarterly for everything else. Each refresh, check the table rows first, then reread your “who this is for” summary to keep it accurate.
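If you track "last checked" dates in a spreadsheet or script, the rhythm above can be expressed as a simple due-date check. This is a minimal sketch under stated assumptions: "monthly" is approximated as 30 days and "quarterly" as 90 days, and the function name and parameters are hypothetical.

```python
from datetime import date, timedelta

# Assumed intervals: "monthly" as 30 days, "quarterly" as 90 days.
MONTHLY = timedelta(days=30)
QUARTERLY = timedelta(days=90)


def refresh_due(last_checked: date, high_traffic: bool, today: date) -> bool:
    """Return True when a page has gone a full interval without a check."""
    interval = MONTHLY if high_traffic else QUARTERLY
    return today - last_checked >= interval


# A high-traffic page last checked 45 days ago is due; a low-traffic one is not.
today = date(2025, 9, 3)
print(refresh_due(today - timedelta(days=45), high_traffic=True, today=today))
print(refresh_due(today - timedelta(days=45), high_traffic=False, today=today))
```

How you decide which pages count as high-traffic is up to you; the check only needs that flag and the date shown in the page's "last checked" line.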

If you publish many comparison pages, generated.app (GENERATED) can help produce consistent first drafts, generate aligned CTAs, and support performance tracking. Treat each page like a living asset: publish, learn what readers do, adjust the structure, and keep it current.

Start by telling readers who each option is best for in 2–4 lines, then back it up with specific differences they can verify. Keep the tone neutral, admit real limits on both sides, and only recommend your pick after you’ve shown the tradeoffs clearly.

It usually fails because it feels biased or incomplete. If you declare a winner too early, hide pricing rules or limits, or use vague claims like “best” without specifics, readers stop trusting the page and leave before taking any action.

Pick the format that matches the query. “X vs Y” works for a direct choice, a shortlist works when someone wants options, and a category grid fits when you’re comparing across segments. Don’t mix formats on one page unless you can keep it simple and consistent.

Put a plain disclosure near the top that says whether you’re independent, sponsored, using affiliate links, or comparing your own product. Add a short note about how you evaluated the tools and when you last checked pricing and key policies, so readers can judge the fairness quickly.

Use criteria people truly compare when they’re about to buy, like price model, important limits, setup time, support terms, what’s included by default, and cancellation or refund rules. Keep each row measurable where possible, and add a brief note when a detail has conditions or depends on plan tier.

Use one primary action that matches the reader’s intent, like “See pricing,” “Start a trial,” or “Book a demo,” and one calmer backup action for people who aren’t ready yet. Place the main CTA after you’ve shown the key differences, so it feels like the natural next step instead of a shove.

Call out what you actually verified, use proof readers can check, and add a “last checked” note for details that change often. If you didn’t test something, say so plainly instead of implying you did.

Write a few simple “choose this if…” rules tied to outcomes like time, cost, and risk. This helps readers self-select without you forcing a one-size-fits-all winner, and it reduces refunds because people understand the downsides before they act.

Use the same criteria, wording style, and level of detail for every option, and include at least one real drawback for your preferred choice. Then reread it as if you were a customer of the competitor and ask whether it still feels fair and complete.

Add a clear “last checked” date and refresh the table first, because that’s where stale info does the most damage. A practical cadence is monthly for high-traffic pages and quarterly for the rest, and if you can’t confirm a detail, note that and explain how you handled it.