Jan 07, 2026·6 min read

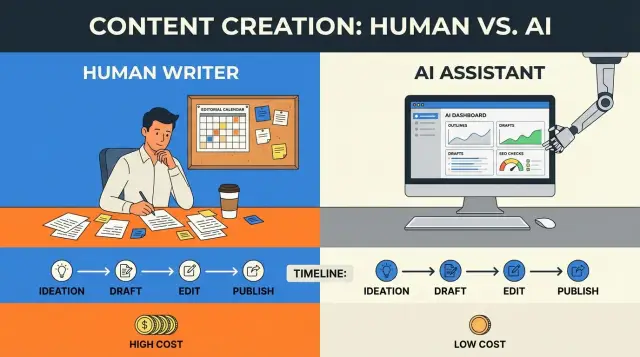

AI-assisted content production vs human-only: time and cost

A practical comparison of human-only and AI-assisted content production, with realistic time and cost tradeoffs, quality risks, and a hybrid workflow.

What you are really comparing (and why it matters)

When people argue about “human-only” vs “AI-assisted,” they’re usually talking past each other. The real comparison isn’t about talent; it’s about the production system: how ideas become a draft, how that draft becomes publishable, and who’s accountable at each step.

“Human-only content writing” typically means a person does most of the work: research, outline, draft, edits, and final checks. Tools still show up (spellcheck, analytics, stock photos), but the writing decisions stay with the writer and editor.

AI-assisted content production usually means a person is still in charge, but delegates parts of the work to a model: generating an outline, drafting sections, suggesting headlines, rewriting for clarity, or adapting a piece for another format. The deciding factor is who owns the final call on facts, tone, and what actually gets published.

That choice changes three things people care about:

- Speed: how quickly you can go from idea to publish.

- Cost: how many paid hours (and how many people) touch a piece.

- Quality: how accurate, consistent, and useful the content is.

You’re not choosing between “fast and cheap” and “slow but good.” You’re choosing where the time goes. With human-only, more time sits in drafting. With AI-assisted, more time shifts into reviewing, correcting, and shaping output to match your standards.

This matters most when you publish often, cover many topics, or need a consistent voice across multiple writers. It matters less when you publish a few high-stakes pages a year (like a positioning page or a legal statement) where the main cost is careful thinking, not typing.

A simple way to frame it: if your bottleneck is the blank page, AI can help. If your bottleneck is trust and accuracy, you need stronger quality control, no matter who wrote the first draft.

How human-only content production usually works

Human-only content production is a people-first process. It relies on a writer’s judgment at every step: what to include, what to leave out, and how to say it in a way that fits the brand.

Most teams follow the same core stages, even if they don’t call them by name. Someone writes a brief (goal, audience, angle), does research, builds an outline, drafts, edits for clarity and accuracy, then publishes with a final scan for formatting and metadata.

Where does the time go? Often not the first draft. The big hours hide in reading, thinking, and waiting.

A realistic example: a marketer writes a 1,200-word post. Drafting might take 2-3 hours, but research can take just as long if the topic needs stats, quotes, or product details. Then feedback starts. One clean round is fine. Three rounds can double the total time.

Common time sinks include research rabbit holes, rewrites after the angle changes, approval queues (legal, brand, product), and formatting/polish right before publishing.

The strengths are clear. Humans are strong at voice, context, and nuance. A good writer notices when a claim sounds risky, when an example feels off, or when a reader needs a simple explanation.

The tradeoff is scale and consistency. When the backlog grows, publishing slows or quality slips. Different writers also produce different structures and levels of detail. Under pressure, teams skip deep research or editing, which is usually where an article becomes genuinely useful.

How AI-assisted production usually works

AI-assisted content production can be as light as using a chatbot to unblock a paragraph, or as heavy as running an automated pipeline that outputs publish-ready pages. The difference isn’t the tool. It’s where humans step in, and how strict the checks are.

A common flow starts with a human setting intent: who the piece is for, what it should help them do, and what the business wants from it (newsletter signups, demos, rankings, support deflection). AI then turns that intent into usable material quickly.

In most teams, the process looks like this: define a clear brief, generate an outline and rough draft, do a human edit for structure and tone, then fact-check and give it a final review.

AI helps most in the messy middle. It can produce a decent first draft, create alternative intros and headlines, expand bullet notes into paragraphs, and adapt content into other formats (newsletter version, short summary, social snippets). It’s also good at keeping structure consistent, which matters when you publish a lot.

What still needs a human is anything that carries real risk. AI can sound confident while being wrong, vague, or outdated. A person should own final decisions on claims, numbers, comparisons, legal or medical advice, and anything tied to your reputation. Humans also protect brand voice, since “on-brand” is usually a set of small choices a model won’t hit without tight guidance.

Example: a marketing lead writes a 6-point brief for a product update post. AI turns it into a structured draft and two headline options in minutes. A human then removes risky claims, adds a real customer scenario, checks dates and specs, and makes the tone match the brand before it goes live.

If you’re working at the heavier automation end, a platform like GENERATED (generated.app) can generate drafts and supporting assets via API, while you keep the same human checkpoints for accuracy and voice.

Time tradeoffs: a realistic breakdown

Time isn’t just “how fast you can type.” It’s the total of research, drafting, and editing, plus the hidden time spent waiting on reviews.

For a 1,200-1,800 word article on a normal (not highly technical) topic, time often breaks down like this:

- Research and outlining: 1.5-4 hours

- Draft writing: 2-6 hours

- Editing and fact checks: 1.5-4 hours

- Final polish (headlines, formatting, meta, images): 0.5-2 hours

- Review cycles and changes: 1-6 hours (often spread across days)
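To see what those ranges add up to, here is a small Python sketch that sums the low and high ends of each stage. The stage names and hour ranges are copied from the list above; the `total_range` helper is just an illustration, not part of any tool.

```python
# Hour ranges per stage for a 1,200-1,800 word article, copied from the list above.
STAGES = {
    "research_and_outlining": (1.5, 4.0),
    "draft_writing": (2.0, 6.0),
    "editing_and_fact_checks": (1.5, 4.0),
    "final_polish": (0.5, 2.0),
    "review_cycles": (1.0, 6.0),
}


def total_range(stages: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Sum the low and high ends of every stage's hour range."""
    low = sum(lo for lo, _ in stages.values())
    high = sum(hi for _, hi in stages.values())
    return low, high


if __name__ == "__main__":
    low, high = total_range(STAGES)
    print(f"Total: {low}-{high} hours")  # Total: 6.5-22.0 hours
```

By those ranges, one article takes roughly a full working day at the low end and close to three at the high end, and review cycles (1-6 hours) have the widest spread of any stage.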

AI usually helps most with the first draft and the blank-page problem. With a strong brief, you can often get a usable outline and draft in 20-60 minutes. But the saved time can move into other places: verifying claims, removing generic phrasing, and aligning tone.

AI tends to save time when you need multiple angles quickly, rewrite for a different audience or length, create variations (FAQs, summaries, social versions), translate/adapt for other languages, or work from templates that keep structure consistent.

AI can also add time when a topic needs careful sourcing and the draft includes subtle errors. Fixing “sounds right” mistakes can take longer than writing cleanly from scratch. It also slows down when you don’t have a clear style guide, because you end up re-editing terminology and tone every time.

Review cycles can erase speed gains. A fast draft still stalls if three stakeholders leave conflicting comments a week apart. For most posts, it helps to set one owner and aim for one review round, saving multi-round reviews for high-risk pages.

Batching and templates change timelines more than most people expect. Draft four posts in one sitting using the same outline pattern, then edit them in a separate block. You cut context switching, and reviewers see a familiar structure.

Cost tradeoffs: what you actually pay for

Cost isn’t just the writer’s invoice. It’s every hour that touches a draft, every delay that pushes a post out of a good publishing window, and every mistake that later shows up as churn, support tickets, or lost trust.

In human-only writing, you typically pay for a writer, an editor, and sometimes a subject expert for accuracy review. Extras can include briefing calls, interview transcription, stock images, or design time.

AI-assisted production changes the mix. Writing time can drop, but you add tool costs and a more disciplined editing and fact-checking step. You still need a human who can judge truth, tone, and intent, because tools can produce confident but wrong claims.

Hidden costs are where teams get surprised. Multiple revision rounds can quietly double the cost of a post, especially when the brief is vague or stakeholders disagree. Delays matter too: if a time-sensitive piece misses the moment, you still paid for it, but it returns less.
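A rough way to see how revision rounds inflate cost is to price every hour that touches the draft. The sketch below is a hypothetical Python model: the `PostCost` class, the hourly rate, hours per round, and tool cost are placeholders you would replace with your own numbers, not figures from this article.

```python
from dataclasses import dataclass


@dataclass
class PostCost:
    """Hypothetical cost model for one post; every input is your own estimate."""
    base_hours: float       # research, drafting, editing, polish
    hours_per_round: float  # time one review round adds (comments + changes)
    review_rounds: int
    hourly_rate: float      # blended rate for everyone who touches the draft
    tool_cost: float = 0.0  # e.g. this post's share of AI tool subscriptions

    def total(self) -> float:
        """Paid hours times rate, plus any per-post tool cost."""
        hours = self.base_hours + self.hours_per_round * self.review_rounds
        return hours * self.hourly_rate + self.tool_cost


# Placeholder numbers: the same post with one review round vs. three.
one_round = PostCost(base_hours=6, hours_per_round=2, review_rounds=1, hourly_rate=50)
three_rounds = PostCost(base_hours=6, hours_per_round=2, review_rounds=3, hourly_rate=50)
print(one_round.total(), three_rounds.total())  # 400.0 600.0
```

With these placeholder inputs, going from one review round to three adds 50% to the cost; plug in your own hours and rates to see where your team lands.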

Volume changes the math. Human-only teams often scale by hiring, which increases fixed costs and management overhead. AI-assisted workflows can scale faster, but only if quality control scales with them. Otherwise you publish more and fix more.

Quality tradeoffs: what improves and what can slip

Quality isn’t one thing. It usually shows up as accuracy (are the facts right), clarity (is it easy to follow), originality (does it say anything new), usefulness (does it help the reader do something), and voice (does it sound like you).

With AI-assisted production, clarity often improves quickly. You can get cleaner structure, better headings, and tighter paragraphs fast, especially with a clear outline and a few example lines.

The common downside is accuracy. AI drafts can invent details, mix up numbers, or imply a source exists when it doesn’t. Another weak spot is voice: the text can feel generic, like it was written for everyone and no one.

Human-only writing tends to win on judgment. A good writer spots what’s actually true, what’s missing, and what will feel credible to your audience. But human-only has its own risks: inconsistency between writers, rushed editing when deadlines pile up, and burnout that leads to safe, repetitive angles and thinner coverage.

A hybrid process works when you treat AI as a fast first pass and keep humans responsible for truth and tone.

A few safeguards catch most issues without turning the workflow into bureaucracy:

- Facts checklist: mark every claim that needs verification, then confirm it before publishing.

- Source rule: if you can’t back a claim with a real reference you trust, rewrite or remove it.

- Style rules: define a few voice basics (reading level, point of view, do/don’t phrases) and apply them consistently.

- Originality pass: add one specific example, lesson learned, or opinion only your team can provide.

- Final human sign-off: one person owns the final read for accuracy, clarity, and voice.

A practical hybrid workflow (step by step)

A good hybrid process uses AI for speed and humans for judgment. The goal isn’t to publish the first draft. It’s to reach a strong, accurate piece faster without losing voice or trust.

Start with a brief a stranger could follow: who it’s for, what they should walk away with, and why they should care. Add a few acceptance checks like word range, tone, must-answer questions, and what counts as “good enough.”
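If you keep briefs in a script or content tool, the acceptance checks can live next to the brief itself. This is a minimal Python sketch; the `Brief` class and its field names are hypothetical, and only the checks a script can actually verify (required fields filled in, word range) are automated. The must-answer questions stay a human checklist.

```python
from dataclasses import dataclass, field


@dataclass
class Brief:
    """Hypothetical brief record: the essentials plus a few acceptance checks."""
    audience: str
    takeaway: str   # what the reader should walk away with
    why_care: str   # why the reader should care
    min_words: int
    max_words: int
    must_answer: list[str] = field(default_factory=list)  # checked by a human

    def missing_fields(self) -> list[str]:
        """Names of required fields left blank; a stranger couldn't follow those."""
        required = ("audience", "takeaway", "why_care")
        return [name for name in required if not getattr(self, name).strip()]

    def word_count_ok(self, draft: str) -> bool:
        """True if the draft's whitespace-separated word count is in range."""
        return self.min_words <= len(draft.split()) <= self.max_words


brief = Brief(
    audience="marketing leads at small SaaS companies",
    takeaway="how to run a small AI-assisted content pilot",
    why_care="",
    min_words=1200,
    max_words=1800,
    must_answer=["What stays human-owned?"],
)
print(brief.missing_fields())               # ['why_care']
print(brief.word_count_ok("word " * 1500))  # True
```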

Next, generate a couple of outline options and choose one strong angle. Tighten it early by removing sections that repeat the same point. This single step prevents bloated drafts later.

Then draft quickly, expecting to rewrite. Let AI produce a full pass, then have a human edit for structure, pacing, and tone. If a paragraph sounds generic, replace it with a concrete detail, a short story, or a clear example.

After that, switch into proof mode. Verify claims, numbers, and definitions. Add one realistic scenario that matches your audience’s day-to-day. Cut filler and any advice that’s too vague to act on.

Finally, do a quality pass and make sure the piece ends with one clear next action for the reader.

Common mistakes and traps to avoid

The biggest risk with AI-assisted content production isn’t “bad writing.” It’s a process that quietly removes responsibility. When nobody owns accuracy and voice, small errors pile up and the content starts to feel generic.

Most rework comes from a few predictable patterns: treating AI output as final, skipping fact checks because it sounds right, optimizing for keywords instead of reader intent, having no single owner for approvals, and using inconsistent prompts so every post sounds different.

Simple guardrails prevent most of this: assign one accountable editor, keep a short prompt template (audience, goal, tone, what to avoid), require quick source checks for challengeable claims, and do a fast reader test (can someone summarize the point after skimming?).
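A short prompt template is easiest to keep consistent when it lives in one place and every writer fills the same slots. Here is a minimal Python sketch; the template wording and the `build_prompt` helper are illustrative assumptions, not a required format for any particular model or tool.

```python
# Hypothetical shared template; the slots mirror the guardrail above
# (audience, goal, tone, what to avoid) plus the specific task.
PROMPT_TEMPLATE = """\
Audience: {audience}
Goal: {goal}
Tone: {tone}
Avoid: {avoid}

Task: {task}
"""


def build_prompt(task: str, audience: str, goal: str, tone: str, avoid: list[str]) -> str:
    """Fill the shared template so every post starts from the same instructions."""
    return PROMPT_TEMPLATE.format(
        audience=audience,
        goal=goal,
        tone=tone,
        avoid="; ".join(avoid) if avoid else "nothing specific",
        task=task,
    )


print(build_prompt(
    task="Draft an outline for a post announcing the new export feature.",
    audience="operations managers at small e-commerce shops",
    goal="help them decide whether to turn the feature on",
    tone="plain, friendly, second person",
    avoid=["hype words", "unverified statistics"],
))
```

Keeping the template in version control also gives you a record of which prompt produced which batch, which helps when posts start to drift in tone.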

Quick pre-publish checklist

Before you hit publish, do one pass that matches real reading behavior: people skim the first screen, scroll fast, and only slow down if you earn it early. Open the draft on mobile and read it once without editing. Notice where you feel confused or bored.

Use these five checks as a final gate, whether the draft was human-only or AI-assisted:

- First-screen clarity: can a reader tell what problem you solve and what they’ll get in the first few lines?

- Careful facts: highlight anything that sounds like a fact (numbers, rankings, “best,” “always,” dates). Confirm it or rewrite it.

- Tone match: pick any two paragraphs. Do they sound like your brand, or like a generic template?

- No padding: cut repeated points, throat-clearing, and extra adjectives.

- Promise vs delivery: do the title and intro match what the section headings actually deliver?
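The "careful facts" check is the easiest one to partially automate: a script can highlight candidate claims so the human reviewer knows where to look. This is a rough Python sketch built on a hand-picked trigger list (an assumption; tune it for your topics). It only flags sentences for review and cannot tell whether a claim is true.

```python
import re

# Hypothetical trigger list: any digit (stats, prices, dates, versions) plus
# absolute or superlative words. Tune it for your own topics.
CLAIM_RE = re.compile(
    r"\d|\b(?:best|worst|always|never|guaranteed|fastest|cheapest|leading|number one)\b",
    re.IGNORECASE,
)


def flag_claims(text: str) -> list[str]:
    """Return sentences with claim-like wording so a human can verify them."""
    # Naive split: end punctuation followed by whitespace starts a new sentence.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if CLAIM_RE.search(s)]


draft = (
    "Our tool is the best option for small teams. It is easy to set up. "
    "Plans start at $20 a month."
)
for sentence in flag_claims(draft):
    print("VERIFY:", sentence)
```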

Do one last skim for trust-breakers: inconsistent terms, uneven capitalization, and long paragraphs (more than 3 sentences). If you publish through an API-based workflow, also confirm your title, description, and language metadata match what’s on the page.
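The long-paragraph check is mechanical enough to script. Below is a naive Python sketch that splits on blank lines and counts sentences by end punctuation; the 3-sentence threshold comes from the text above, while the splitting rules are simplifying assumptions (abbreviations such as "e.g." will be over-counted).

```python
import re


def long_paragraphs(text: str, max_sentences: int = 3) -> list[int]:
    """Return 1-based indexes of paragraphs with more than max_sentences sentences."""
    paragraphs = [p for p in re.split(r"\n\s*\n", text.strip()) if p.strip()]
    flagged = []
    for i, para in enumerate(paragraphs, start=1):
        # Naive sentence count: split after ., ! or ? followed by whitespace.
        sentences = [s for s in re.split(r"(?<=[.!?])\s+", para.strip()) if s]
        if len(sentences) > max_sentences:
            flagged.append(i)
    return flagged


sample = "One. Two. Three.\n\nOne. Two. Three. Four.\n\nShort."
print(long_paragraphs(sample))  # [2]
```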

Next steps: run a small test and improve the process

Treat this as a pilot, not a permanent switch. A small, controlled test shows the real time, cost, and quality impact for your team and topics.

Pick one content type (for example, how-to blog posts) and run a batch of 5-10 articles. Use the same editor across the batch if possible so the comparison is clean.

Set a baseline from your last human-only posts (hours, cost, time-to-publish, performance after 30 days). Then standardize three things: a brief template, a prompt template, and an editorial checklist. Decide up front what stays human-owned. A simple rule holds up well: humans own the truth and the final say; automation supports speed and consistency.

If you have repeated needs like publishing across multiple pages and languages, tools such as GENERATED can help produce drafts, translations, and supporting elements consistently. The pilot still succeeds or fails on the same thing: clear accountability and reliable checks.

Don’t judge the pilot only by time saved. Look at revision count, factual issues caught, voice consistency, and outcomes like impressions, clicks, and conversions (if relevant). Review monthly, adjust one lever at a time, and give the workflow two or three cycles before you decide what to keep.
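To keep the pilot comparison honest, record the same few numbers for every post in the baseline and in the pilot, then compare averages. A minimal Python sketch follows; the `PostRecord` fields are assumptions drawn from the metrics named above, and you would fill them from your own time tracking and review history.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PostRecord:
    """One published post from the human-only baseline or the pilot."""
    hours: float
    revision_rounds: int
    factual_issues_caught: int
    days_to_publish: float


def summarize(posts: list[PostRecord]) -> dict[str, float]:
    """Average each metric across a batch of posts."""
    return {
        "hours": mean(p.hours for p in posts),
        "revision_rounds": mean(p.revision_rounds for p in posts),
        "factual_issues_caught": mean(p.factual_issues_caught for p in posts),
        "days_to_publish": mean(p.days_to_publish for p in posts),
    }


def compare(baseline: list[PostRecord], pilot: list[PostRecord]) -> dict[str, float]:
    """Pilot average minus baseline average per metric (negative = lower in pilot)."""
    base, trial = summarize(baseline), summarize(pilot)
    return {metric: trial[metric] - base[metric] for metric in base}
```

A higher `factual_issues_caught` in the pilot is not automatically bad; it may simply mean the fact-check step is doing its job. Outcome metrics like impressions, clicks, and conversions are left out here because they come from your analytics source.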

FAQ

What counts as “human-only” content writing?

Human-only means a person makes the key writing decisions from start to finish: research, outline, draft, edits, and final checks. Tools can still be used, but the writer and editor stay responsible for what’s published.

What does “AI-assisted content production” actually mean in practice?

AI-assisted usually means a human sets the brief and standards, then uses AI to speed up parts like outlining, drafting, rewrites, or repurposing. A person should still own the final call on facts, tone, and what goes live.

When is human-only the better choice?

Pick human-only when the stakes are high and accuracy or wording risk matters more than speed, like legal pages, sensitive topics, or core positioning. You can still use basic tools, but keep the writing and verification fully human-led.

When does AI-assisted production help the most?

AI helps most when your bottleneck is getting from a blank page to a workable draft, or when you need consistent structure across many posts. It also shines for creating variations like summaries, alternative intros, and format adaptations.

Where does the time go in an AI-assisted workflow?

A common shift is that drafting becomes much faster, but review time becomes more important. You often spend more effort verifying claims, removing generic wording, and making the piece match your brand voice.

Can AI-assisted writing ever take longer than human-only?

It can add time when the topic needs careful sourcing and the AI output includes subtle errors that “sound right.” If you end up rewriting most sections or doing heavy fact-checking, starting from scratch may be faster.

How does AI affect content quality?

Quality can improve quickly in clarity and structure, because you can iterate on phrasing and organization fast. Quality can slip on accuracy and originality, so you need a deliberate pass to verify claims and add specific, real details.

What’s the simplest way to prevent factual mistakes with AI drafts?

Use a simple rule: highlight any claim that could be challenged (numbers, dates, rankings, “best,” “always”), then verify it before publishing. If you can’t confidently back it, rewrite it in a safer, clearer way or remove it.

How do I keep a consistent brand voice with AI-assisted content?

Create a short style guide with a few non-negotiables like reading level, preferred terms, point of view, and phrases to avoid. Then have one accountable editor apply it consistently so posts don’t drift in tone across writers or prompts.

What’s a practical hybrid workflow I can run without making it complicated?

Start with a clear brief, generate an outline and draft, then do a human edit for structure and tone, followed by fact checks and a final sign-off. Keep ownership clear: AI can help with speed, but one person should be responsible for accuracy and the final publish decision.